
This analysis is based on publicly available information as of August 20, 2025. We update it quarterly or when major AI advances roll out.

Generative answers across Google AI Overviews/Mode, ChatGPT, Gemini, and Perplexity increasingly influence pre-purchase research. The risk: models may surface third-party or outdated pages instead of your canonical sources, weakening message control and conversion paths. In this guide, AI visibility means two outcomes: (1) your brand/content appears in answers, and (2) your preferred pages are cited/mentioned.
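To make the two outcomes concrete, here is a minimal sketch that scores a set of captured AI answers on both: the share of answers that mention the brand, and the share that cite at least one preferred page. It is an illustration under stated assumptions, not any vendor's method. The `Answer` record, the function name, the placeholder brand "Acme", and the example.com URLs are all hypothetical. Plain substring matching is also a simplifying assumption: it misses misspellings and can over-match short brand names.

```python
from dataclasses import dataclass, field


@dataclass
class Answer:
    """One captured AI answer (hypothetical schema: engine name, answer text, cited URLs)."""
    engine: str
    text: str
    cited_urls: list = field(default_factory=list)


def visibility_outcomes(answers, brand_terms, preferred_urls):
    """Return (inclusion_rate, preferred_citation_rate) across captured answers.

    inclusion_rate: share of answers that mention any brand term (outcome 1).
    preferred_citation_rate: share of answers citing at least one preferred URL (outcome 2).
    """
    if not answers:
        return 0.0, 0.0
    terms = [t.lower() for t in brand_terms]
    preferred = set(preferred_urls)
    included = sum(any(t in a.text.lower() for t in terms) for a in answers)
    cited = sum(any(u in preferred for u in a.cited_urls) for a in answers)
    return included / len(answers), cited / len(answers)


# Illustrative data only; "Acme" and the example.com URLs are placeholders.
PREFERRED = ["https://acme.example/docs/sso", "https://acme.example/pricing"]
answers = [
    Answer("chatgpt", "Acme supports SSO via SAML.", ["https://acme.example/docs/sso"]),
    Answer("perplexity", "Acme offers secure file sharing.", ["https://reviews.example/acme"]),
    Answer("gemini", "Popular options include OtherCo.", []),
    Answer("ai_overviews", "OtherCo and Acme both offer this.", ["https://acme.example/pricing"]),
]
inclusion, preferred_rate = visibility_outcomes(answers, ["Acme"], PREFERRED)
print(f"inclusion={inclusion:.2f} preferred_citation={preferred_rate:.2f}")
```

The gap between the two numbers is the point. In this toy data the brand appears in three of four answers, but only two cite a preferred page. The second answer relies on a third-party review page, which is exactly the risk described above.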

i. Evidence-based: Every capability is traced to public materials (docs, release notes, product videos/demos, or credible coverage) available as of the date above.

ii. Practical: A buyer’s lens on what to ask and verify in demos.

iii. Actionable: A framework you can apply immediately for evaluation and pilots.

iv. Not a ranking. This is a dated snapshot to help teams cut through noise and choose next steps. Vendors can request factual corrections.

This review is authored by Quattr. We include vendors that meet explicit inclusion criteria (below). We assess only features that can be reasonably verified from public sources or reproducible tests; statements we cannot verify are treated as claims, not facts.

To make this comparison meaningful, we focused on platforms that meet two core criteria:

i. They provide AI inclusion tracking across LLMs (e.g., ChatGPT, Google AI Overviews/AI Mode).

ii. They go beyond surface-level visibility tracking and offer capabilities that actually influence how AI answer engines represent your brand, from monitoring sentiment in AI answers, to validating AI activity at the log level, to automating fixes like content structure and internal linking, all with enterprise-grade deployment controls.

We focused on five platforms, each representing a different type of Generative Engine Optimization solution:

i. Profound → Monitoring-first (visibility + benchmarking).

ii. Quattr → Execution-led (monitoring + deployment).

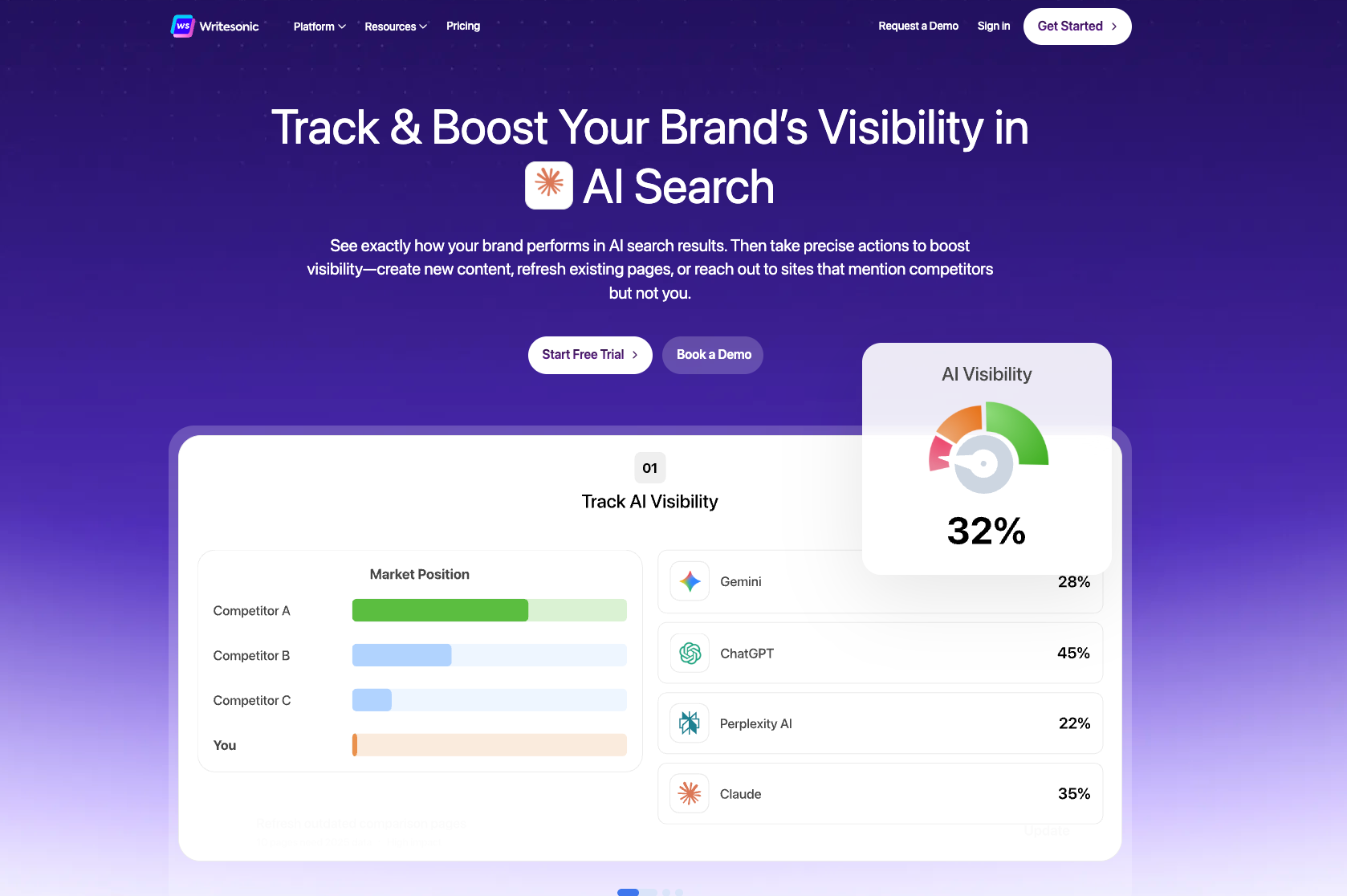

iii. Writesonic → Content-first (AI writing + GEO monitoring).

iv. AthenaHQ → Brand monitoring + sentiment.

v. BrightEdge → SEO suite extension with GEO add-ons.

Out of scope: General SEO suites or content tools without shipped GEO-specific functionality. We note GEO-adjacent offerings briefly in the appendix (e.g., Semrush, Conductor, Ahrefs), as several are evolving in this direction.

We evaluated each platform across four pillars that determine whether a Generative Engine Optimization tool can truly make an impact:

i. Be found (Visibility): Can the tool reliably track your share of voice across AI engines like ChatGPT, Perplexity, or Google AI Overviews, with data you can trust over time?

ii. Be right (Truth & Content): Does it help ensure AI systems pull from your most accurate sources by strengthening machine comprehension and internal links, cleaning up duplicates, and organizing content clearly?

iii. Ship fast (Scale): Can your team deploy fixes quickly and safely? That means testing changes before they go live, being able to undo them if needed, and keeping a clear record of what changed.

iv. Prove it (Impact): Can the tool show direct results, like higher inclusion in AI answers, more branded mentions, and measurable lifts in traffic or conversions?
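Share of voice under the "Be found" pillar can be defined in several ways. One minimal sketch records each brand mention detected in a sampled answer as an (engine, brand) pair. It then computes each brand's fraction of all mentions per engine. The data and brand names are placeholders. Sampling each prompt several times is an assumption meant to smooth out run-to-run variation in generative answers, which matters if the numbers are to be trusted over time.

```python
from collections import Counter, defaultdict


def share_of_voice(mentions):
    """Compute per-engine share of voice.

    mentions: iterable of (engine, brand) pairs, one per brand mention detected
    in a sampled AI answer. Returns {engine: {brand: share}}; shares sum to 1 per engine.
    """
    counts = defaultdict(Counter)
    for engine, brand in mentions:
        counts[engine][brand] += 1
    return {
        engine: {brand: n / sum(c.values()) for brand, n in c.items()}
        for engine, c in counts.items()
    }


# Placeholder data: each prompt is assumed to be sampled several times per engine,
# because generative answers can change from run to run.
mentions = [
    ("chatgpt", "Acme"), ("chatgpt", "OtherCo"), ("chatgpt", "Acme"), ("chatgpt", "Acme"),
    ("perplexity", "OtherCo"), ("perplexity", "Acme"),
]
sov = share_of_voice(mentions)
print(sov["chatgpt"])  # {'Acme': 0.75, 'OtherCo': 0.25}
```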

Let us now look at each platform closely.

Profound positions itself as an AI visibility and benchmarking platform. Its Conversation Explorer tracks brand inclusion and share of voice in AI answers. The platform also uses server logs to show real-time AI crawler activity and lets teams submit new content for faster AI discovery.

Its Answer Engine Insights tracks where and how often your brand appears in AI answers, analyzes sentiment, and benchmarks visibility against competitors. Profound also monitors product presence in ChatGPT Shopping, with placement tracking, competitive benchmarking, and retailer mapping.

Strengths:

i. Conversation Explorer tracks AI inclusion and share of voice in real time.

ii. Agent Analytics validates AI crawler activity at the log level (server-side evidence of how AI bots crawl your site).

iii. Competitive benchmarking across engines like ChatGPT, Claude, Perplexity, and Google AI Overviews.

iv. Engagement Managers: Serve as the customer’s primary point of contact and trusted advisor, guiding teams through the future of search and helping them improve AI visibility with the Profound platform.
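Log-level validation of AI crawler activity generally starts from web server access logs. The sketch below illustrates that idea; it is not Profound's Agent Analytics implementation. It assumes the Apache/Nginx "combined" log format and matches a hand-picked list of publicly documented AI crawler user-agent tokens. It reports hits and the last visit per (bot, page). User-agent strings can be spoofed, so a production check would also verify source IPs against ranges published by each operator, where available.

```python
import re
from datetime import datetime

# Hand-picked substrings of publicly documented AI crawler user agents (extend as needed).
AI_BOT_TOKENS = ["GPTBot", "OAI-SearchBot", "ChatGPT-User", "PerplexityBot", "ClaudeBot", "CCBot"]

# Apache/Nginx "combined" log format: ip ident user [time] "request" status bytes "referer" "ua"
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def ai_crawler_activity(lines):
    """Return {(bot, path): {"hits": int, "last_seen": datetime}} for AI crawler requests."""
    activity = {}
    for line in lines:
        m = LOG_RE.match(line)
        if not m:
            continue  # skip lines that are not in combined format
        bot = next((t for t in AI_BOT_TOKENS if t in m["ua"]), None)
        if bot is None:
            continue  # not an AI crawler we track
        ts = datetime.strptime(m["ts"], "%d/%b/%Y:%H:%M:%S %z")
        entry = activity.setdefault((bot, m["path"]), {"hits": 0, "last_seen": ts})
        entry["hits"] += 1
        entry["last_seen"] = max(entry["last_seen"], ts)
    return activity


# Illustrative log lines; IPs come from documentation ranges and the UAs are simplified.
GPT_UA = "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.1; +https://openai.com/gptbot)"
sample = [
    f'203.0.113.5 - - [20/Aug/2025:10:15:32 +0000] "GET /docs/sso HTTP/1.1" 200 5120 "-" "{GPT_UA}"',
    f'203.0.113.9 - - [20/Aug/2025:11:02:10 +0000] "GET /docs/sso HTTP/1.1" 200 5120 "-" "{GPT_UA}"',
    '198.51.100.7 - - [20/Aug/2025:12:00:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '192.0.2.1 - - [20/Aug/2025:12:30:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
activity = ai_crawler_activity(sample)
for (bot, path), stats in sorted(activity.items()):
    print(bot, path, stats["hits"], stats["last_seen"].isoformat())
```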

Considerations:

i. No documented ability to deploy fixes at scale (no CMS/API path).

ii. Impact on conversions depends on the team taking action on insights.

Best for: Federated orgs or agencies that need cross-brand visibility and compliance monitoring, especially for reporting and benchmarking.

Quattr is an execution-led, AI-native GEO platform that pairs AI visibility tracking (Google AI Overviews/AI Mode, ChatGPT, Claude, Perplexity) with first-party data such as clicks, impressions, and conversions. It offers automated internal linking governed by custom logic, anchor-text generation, and guided content optimization that strengthens semantic relevance and machine readability.

Strengths:

i. AI visibility tracking: Covers ChatGPT, Google AI Mode and AI Overviews, Claude, Gemini, and Perplexity, tracking citations, mentions, sentiment, and share of voice. Customizable Looker dashboards and exports are available.

ii. Truth & content governance: Its AI SEO agent, GIGA, prioritizes content gaps, creates new content, and refreshes existing content for AI search readiness, helping generative engines cite your pages as trusted sources. It also automates internal linking so AI systems consistently discover and cite the right pages.

iii. Execution at scale: Allows testing changes in a sandbox environment and scoring against competitors in AI search. Deploys changes via API, CMS plugins, or edge injection.

iv. Closed-loop analytics: Quattr connects first‑party GSC and GA4 data to AI citations and mentions, letting teams see whether those appearances drive traffic, conversions, and measurable impact.

v. Expert Growth Concierge: Offers a dedicated SEO/GEO expert who helps select prompts, guides Generative Engine Optimization strategy, and extends in-house teams by collaborating on Answer Engine Optimization initiatives through regular meetings, Slack/Teams chat, and similar channels.
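Closed-loop analytics comes down to joining AI citation data with first-party metrics on a shared key, such as the page URL. The following is a generic sketch of that join, not Quattr's implementation. The field names and inline dictionaries are hypothetical stand-ins for exports from an AI visibility tool, Google Search Console, and GA4. A join like this shows correlation only. The pilot's holdout group is what supports causal claims.

```python
from dataclasses import dataclass


@dataclass
class PageRow:
    url: str
    ai_citations: int  # AI answers citing the URL (hypothetical visibility-tool export)
    gsc_clicks: int    # Search Console clicks for the URL
    conversions: int   # GA4 key events attributed to sessions landing on the URL


def join_by_url(citations, gsc_clicks, conversions):
    """Full outer join of three {url: count} maps; a URL missing from a source gets 0."""
    urls = set(citations) | set(gsc_clicks) | set(conversions)
    rows = [
        PageRow(u, citations.get(u, 0), gsc_clicks.get(u, 0), conversions.get(u, 0))
        for u in urls
    ]
    # Most-cited pages first; the URL as a tiebreaker keeps output order stable.
    return sorted(rows, key=lambda r: (-r.ai_citations, r.url))


# Placeholder exports keyed by normalized path.
rows = join_by_url(
    citations={"/docs/sso": 12, "/pricing": 4},
    gsc_clicks={"/docs/sso": 340, "/pricing": 120, "/blog/sftp-vs-mft": 80},
    conversions={"/pricing": 9, "/docs/sso": 3},
)
for r in rows:
    print(r)
```

URL normalization (trailing slashes, query strings, http vs. https) is the usual failure point in joins like this. Normalizing keys before joining is assumed here.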

Considerations: Analytics features are currently offered only within the AI SEO Suite, which is custom-priced. Quattr does not currently offer low-cost, month-to-month plans for AI visibility tracking.

Best for: Enterprise teams that want to move fast, combining AI visibility tracking with automated fixes, and prove revenue impact.

Writesonic is a content-led GEO platform that combines AI writing workflows with monitoring and analytics to help teams produce optimized copy and understand how AI crawlers interact with their sites.

Strengths:

i. AI content workflows: Structured drafting and optimization with brand-voice controls, helping teams scale content production while staying consistent.

ii. AI visibility and traffic analytics: Indicates which AI crawlers access your pages, connects to GA4 for session/behavior context, and helps prioritize updates.

iii. Page-level insights: Shows when AI crawlers last visited, which bots, and which pages were accessed, surfacing where optimizations are most urgent.

iv. Actionable recommendations: Turns monitoring into guidance, flagging missing citations, content gaps, and competitor moves, each paired with a suggested fix.

Considerations:

Large-scale site restructuring automation is not documented. Deployment automation (CMS/API/edge) is also unclear, so rollout and rollback paths should be verified.

Best for: Content-led marketing teams that want AI-aware writing tools plus basic Generative Engine Optimization monitoring.

AthenaHQ emphasizes monitoring and brand intelligence. It focuses not just on whether a brand appears in AI answers, but also on how the brand is represented.

Strengths:

i. Tracks brand presence across AI engines and Google AI Overviews.

ii. Adds competitive benchmarking and sentiment analysis, which is critical for understanding how your brand is being portrayed (positive/negative/neutral) in AI-generated answers that are often presented as objective facts.

iii. Offers connectors into GA4, GSC, and log analytics for context.

Considerations:

As an analytics-first platform, it appears to lack deep content creation or technical execution features. Its Action Center provides recommendations and AI-optimized content drafts, but teams will need other tools to implement changes such as automated internal linking or schema updates.

Best for: Brand and communications leaders who need to measure representation and narrative quality in AI systems before tackling structural site fixes.

BrightEdge is an established SEO platform that has extended into Answer Engine Optimization. It builds on its existing reputation with SEO teams and adds AI visibility features to its suite.

Strengths:

i. Tracks Google AI Overviews and answer-engine output through its Generative Parser and AI Catalyst, monitoring inclusion and surfacing related insights.

ii. Provides governance and content recommendations aligned to enterprise SEO operations, helping teams standardize templates, briefs, and on‑page improvements.

iii. Offers enterprise reporting that blends traditional SEO KPIs with GEO‑adjacent metrics.

Considerations:

i. Automated GEO deployment, such as automated linking or canonical fixes, is not publicly documented.

ii. Most GEO capabilities appear as extensions within the broader SEO suite rather than a full execution-led GEO stack.

Best for: Enterprises already standardized on BrightEdge who want to layer AI visibility and reporting into existing SEO processes without adopting a new platform.

Below is the snapshot and side-by-side comparison of publicly available evidence on the GEO tools.

Semrush has expanded into AI visibility, adding AI Overview/AI Mode tracking in Position Tracking and trend-level monitoring in Sensor (a tool within Semrush) as part of early-2025 updates.

Strengths:

Semrush’s Position Tracking detects AI Overviews/AI Mode for tracked keywords, and Sensor surfaces AI Overview visibility over time. It retains strong brief and on-page optimization tooling from its core SEO suite, and its GA/GA4 integrations add analytics context to reporting workflows.

Considerations:

As a visibility-led tool, Semrush does not offer automated deployment features such as internal linking. Reporting connects to GA4 (analytics) for context, but it does not trace specific site changes through to AI inclusions and conversions.

Best for: Enterprises already standardized on Semrush for SEO that want baseline AI visibility (AI Overviews/AI Mode) inside an existing suite, while executing Generative Engine Optimization changes through internal ops.

Conductor positions its GEO capabilities under AI Search Performance and, as of August 2025, explicitly supports Google AI Overviews (AIO) and Google AI Mode, with refinements for ChatGPT tracking.

Strengths:

Conductor provides coverage across AIO, AI Mode, and ChatGPT. It extends into sentiment and prompt-level insights, and its dashboards bring AI visibility signals together with established SEO KPIs for stakeholder reporting.

Considerations:

Conductor does not document automated deployment features such as internal linking, with execution left to CMS or engineering teams. Its reporting highlights visibility and performance, but tracking does not extend from specific site changes through to AI inclusions and conversions.

Best for: SEO teams already standardized on Conductor who want to expand into AI visibility (AIO, AI Mode, ChatGPT) while continuing to manage structural GEO execution internally.

Ahrefs entered GEO-adjacent monitoring in mid-2025 with Brand Radar, a module that tracks brand mentions and citations across AI Overviews, ChatGPT, and Perplexity.

Strengths:

Brand Radar provides clear visibility into mentions and citations across major AI engines, while Web Analytics adds an AI referral lens to measure traffic and user behavior from AI surfaces. Ahrefs’ strong research and educational resources continue to be a core value for its user base.

Considerations:

As a monitoring-led platform, Ahrefs does not support enterprise-level guided execution such as CMS or API deployment. Reporting adds an AI traffic perspective, but tracking does not extend from specific site changes through to AI inclusions and conversions.

Best for: Teams that rely on Ahrefs for research and want early AI visibility monitoring (AI Overviews, ChatGPT, Perplexity) plus traffic insights, while handling execution separately.

Before committing to a full platform, the smartest move is to run a short pilot. Here’s a simple, structured way to test whether a GEO tool can actually deliver results for your brand.

Queries: Build a panel of ~200 queries (mix of branded + non-branded).

URLs: Select ~1,000 URLs across 6–8 templates (so you test multiple page types).

Ground truth: Gather your core product/brand docs to define canonical messaging.

Goal: Define the scope so you can measure lift against a real baseline.
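A reproducible query panel keeps the baseline and post-change measurements comparable. The following sketch makes several assumptions. It classifies candidate queries as branded when they contain a brand term on word boundaries, then samples a fixed mix with a seeded random generator. The function name, the 30% branded share, the seed, and the placeholder queries are illustrative choices, not prescriptions.

```python
import random
import re


def build_query_panel(candidates, brand_terms, size=200, branded_share=0.3, seed=7):
    """Sample a fixed pilot panel with a set branded/non-branded mix.

    branded_share=0.3 is an illustrative default. A fixed seed keeps the panel
    reproducible, so baseline and post-change runs use identical queries.
    """
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in brand_terms) + r")\b", re.IGNORECASE
    )
    branded = [q for q in candidates if pattern.search(q)]
    non_branded = [q for q in candidates if not pattern.search(q)]
    n_branded = min(len(branded), round(size * branded_share))
    n_non_branded = min(len(non_branded), size - n_branded)
    rng = random.Random(seed)
    panel = [(q, "branded") for q in rng.sample(branded, n_branded)]
    panel += [(q, "non-branded") for q in rng.sample(non_branded, n_non_branded)]
    return panel


# Placeholder candidate queries (e.g., from Search Console exports or keyword research).
candidates = [f"acme feature {i}" for i in range(100)] + [
    f"secure file sharing tip {i}" for i in range(300)
]
panel = build_query_panel(candidates, ["Acme"])
print(len(panel), sum(1 for _, kind in panel if kind == "branded"))
```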

Entity/schema fixes: Add or clean up structured data (FAQ, product schema, policy pages).

Internal linking plan: Apply semantic hub-and-spoke links to surface “source-of-truth” pages.

Content refreshes: Target 30–50 key pages for rewrites or updates.

Goal: Ship enough changes to give AI systems better material to pull from.
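For the entity/schema step, FAQ content can be expressed as schema.org `FAQPage` JSON-LD. The sketch below builds that markup from question/answer pairs and wraps it in a `<script type="application/ld+json">` tag. The function names and the "Acme" Q&A are hypothetical. Which schema types fit each page template is a judgment call for your own site.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


def as_script_tag(jsonld):
    # Escaping "</" as "<\/" stops a stray "</script>" in the text from closing the tag early;
    # "\/" is a valid JSON escape for "/", so the payload still parses to the same data.
    return '<script type="application/ld+json">\n' + jsonld.replace("</", "<\\/") + "\n</script>"


# Placeholder Q&A; in a pilot these would come from the ground-truth docs gathered up front.
snippet = faq_jsonld([
    ("Does Acme support SSO?", "Yes. Acme supports SAML 2.0 single sign-on."),
])
print(as_script_tag(snippet))
```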

Sandbox testing: Deploy changes to a staging/sandbox environment first to validate them. For larger, data-driven websites, consider A/B testing by applying changes to 10–20% of URLs first.

Full deploy: If results look stable, roll out across the full set.

Rollback ready: Validate rollback procedures before go-live to de-risk issues.

Goal: Prove you can move at scale without breaking things.
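Two mechanics in this step lend themselves to a sketch: deterministically assigning a share of URLs to the test group, and keeping a change record so rollback is mechanical. The example makes three assumptions. It uses a salted SHA-256 hash split, so the same URL always lands in the same group. It sets a 15% test share, within the 10–20% range above. It uses an in-memory dictionary as a hypothetical stand-in for a CMS. A real deployment would route changes through your CMS, API, or edge layer.

```python
import hashlib
from dataclasses import dataclass


def in_test_group(url: str, test_share: float = 0.15, salt: str = "geo-pilot-1") -> bool:
    """Deterministically assign a URL to the test group (roughly test_share of all URLs)."""
    digest = hashlib.sha256(f"{salt}:{url}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return bucket < test_share


@dataclass
class Change:
    url: str
    field: str
    before: str
    after: str


class ChangeLog:
    """Records every applied change so a rollout can be reverted, newest first."""

    def __init__(self, site: dict):
        self.site = site  # hypothetical CMS stand-in: {url: {field: value}}
        self.applied: list = []

    def apply(self, url: str, field: str, value: str) -> None:
        before = self.site[url][field]
        self.site[url][field] = value
        self.applied.append(Change(url, field, before, value))

    def rollback(self) -> None:
        while self.applied:
            c = self.applied.pop()
            self.site[c.url][c.field] = c.before


site = {f"/p/{i}": {"title": f"Page {i}"} for i in range(1000)}
test_urls = [url for url in site if in_test_group(url)]
log = ChangeLog(site)
for url in test_urls:
    log.apply(url, "title", site[url]["title"] + " | Acme")
print(f"{len(test_urls)} of {len(site)} URLs changed")
log.rollback()  # restores every original title, newest change first
```

Because the split depends only on the salt and the URL, rerunning the assignment after a crash or redeploy yields the same groups. Changing the salt starts a fresh experiment.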

KPIs to track:

% increase in AI inclusion for your test clusters.

% increase in brand citations across ChatGPT, Google AI Overviews, etc.

Lift in micro-conversions (sign-ups, downloads) vs. your holdout group.

Deployment quality (≤0.5% error rate on changed pages).

Goal: Tie changes directly to visibility and conversion outcomes, not just vanity metrics.
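The KPIs above reduce to a few simple calculations. This sketch makes three assumptions. It uses a relative percent change for inclusion and citation metrics. It uses a ratio-of-ratios estimate for micro-conversion lift against the holdout, which nets out shifts that hit both groups. It applies a hard gate at the 0.5% error-rate target. The function names and numbers are placeholders. A real readout should also check whether the lift exceeds normal week-to-week noise.

```python
def pct_change(before, after):
    """Relative change in percent, e.g., AI inclusion rate for a test cluster."""
    if before == 0:
        raise ValueError("zero baseline: report the absolute change instead")
    return (after - before) / before * 100


def lift_vs_holdout(test_before, test_after, holdout_before, holdout_after):
    """Test-group growth beyond the holdout's growth, in percent.

    Dividing by the holdout's growth factor nets out seasonality and other
    site-wide shifts that affect both groups (a simple ratio-of-ratios estimate).
    """
    test_growth = test_after / test_before
    holdout_growth = holdout_after / holdout_before
    return (test_growth / holdout_growth - 1) * 100


def deployment_ok(errors, changed_pages, max_error_rate=0.005):
    """Gate on the <=0.5% error-rate target for changed pages."""
    return changed_pages > 0 and errors / changed_pages <= max_error_rate


# Placeholder pilot numbers.
print(round(pct_change(0.20, 0.26), 1))               # inclusion rate 20% -> 26%
print(round(lift_vs_holdout(400, 460, 500, 525), 2))  # micro-conversions vs. holdout
print(deployment_ok(errors=4, changed_pages=1000))    # 0.4% error rate
```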

In 4 weeks, you’ll know whether a GEO tool can actually deploy at scale, fix canonical truth, and prove impact. If a vendor can’t run a pilot like this, it probably can’t handle enterprise GEO.

GEO is moving fast. Most tools today give you one piece of the puzzle: visibility, content, or monitoring. Very few bring all four pillars together.

When evaluating vendors, be cautious of screenshots or flashy charts. Look for tangible data, clear processes, working demos, and evidence of impact on conversions. That’s how you separate marketing GEO from enterprise GEO.

If you’re serious about owning your brand’s AI narrative, this framework will help you find the right partner. Very few GEO solutions stand out as execution-first. Those that do go beyond measuring AI visibility and help you act on it with structured content guidance, automated internal linking, scalable deployment paths, and closed-loop analytics that connect visibility to business impact.

Choosing such an execution-led partner ensures you’re not just monitoring AI search, but actively shaping how engines represent your brand at scale, a core principle of Answer Engine Optimization (AEO).

SEO optimizes for rank‑based retrieval and clicks; GEO optimizes for inclusion/citation in AI answers. Modern platforms should unify both in one panel per topic cluster.

LLMs retrieve by meaning and authority. Internal links route authority to your “source-of-truth” pages, improving both classic SEO and the odds that those pages are selected for generative answers. Linking is necessary but not sufficient without entity/schema and evidence hygiene.
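A hub-and-spoke plan can be expressed as a small graph operation. Every supporting page links to its cluster's source-of-truth hub, and the hub links back to each supporting page. The sketch below assumes two hypothetical inputs: a hand-curated cluster map with anchor text, and a set of existing links. It returns only the links that are missing. It is an illustration, not any vendor's linking logic.

```python
def hub_and_spoke_links(clusters, existing):
    """Plan missing hub-and-spoke internal links.

    clusters: {topic: {"hub": url, "hub_anchor": text, "spokes": {url: anchor_text}}}
    existing: set of (source_url, target_url) links already on the site.
    Returns (source_url, target_url, anchor_text) tuples to add.
    """
    plan = []
    for cluster in clusters.values():
        hub = cluster["hub"]
        for spoke, spoke_anchor in cluster["spokes"].items():
            if spoke == hub:
                continue  # never link a page to itself
            if (spoke, hub) not in existing:  # spoke -> source-of-truth hub
                plan.append((spoke, hub, cluster["hub_anchor"]))
            if (hub, spoke) not in existing:  # hub -> supporting spoke
                plan.append((hub, spoke, spoke_anchor))
    return plan


# Placeholder cluster map and existing-link set.
clusters = {
    "secure file sharing": {
        "hub": "/secure-file-sharing",
        "hub_anchor": "secure file sharing",
        "spokes": {"/blog/sftp-vs-mft": "SFTP vs. MFT", "/docs/sso": "single sign-on setup"},
    }
}
existing = {("/blog/sftp-vs-mft", "/secure-file-sharing")}
plan = hub_and_spoke_links(clusters, existing)
for link in plan:
    print(link)
```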

If you have a deployment layer or agency ops to ship structural changes for LLM discoverability, then yes. Otherwise, you’ll stall at insights.