Kiteworks Quadrupled Non-Brand Traffic with Quattr.

Enterprise SEO doesn’t fail because teams lack insight. It fails when numbers that looked clean and achievable stop holding once execution begins, when a metric must survive prioritization, budget scrutiny, and executive challenge without being grounded in the site’s actual performance.

Most platforms offer confidence up front. Clean metrics. Stable curves. Clear winners. But that confidence is often constructed, not observed.

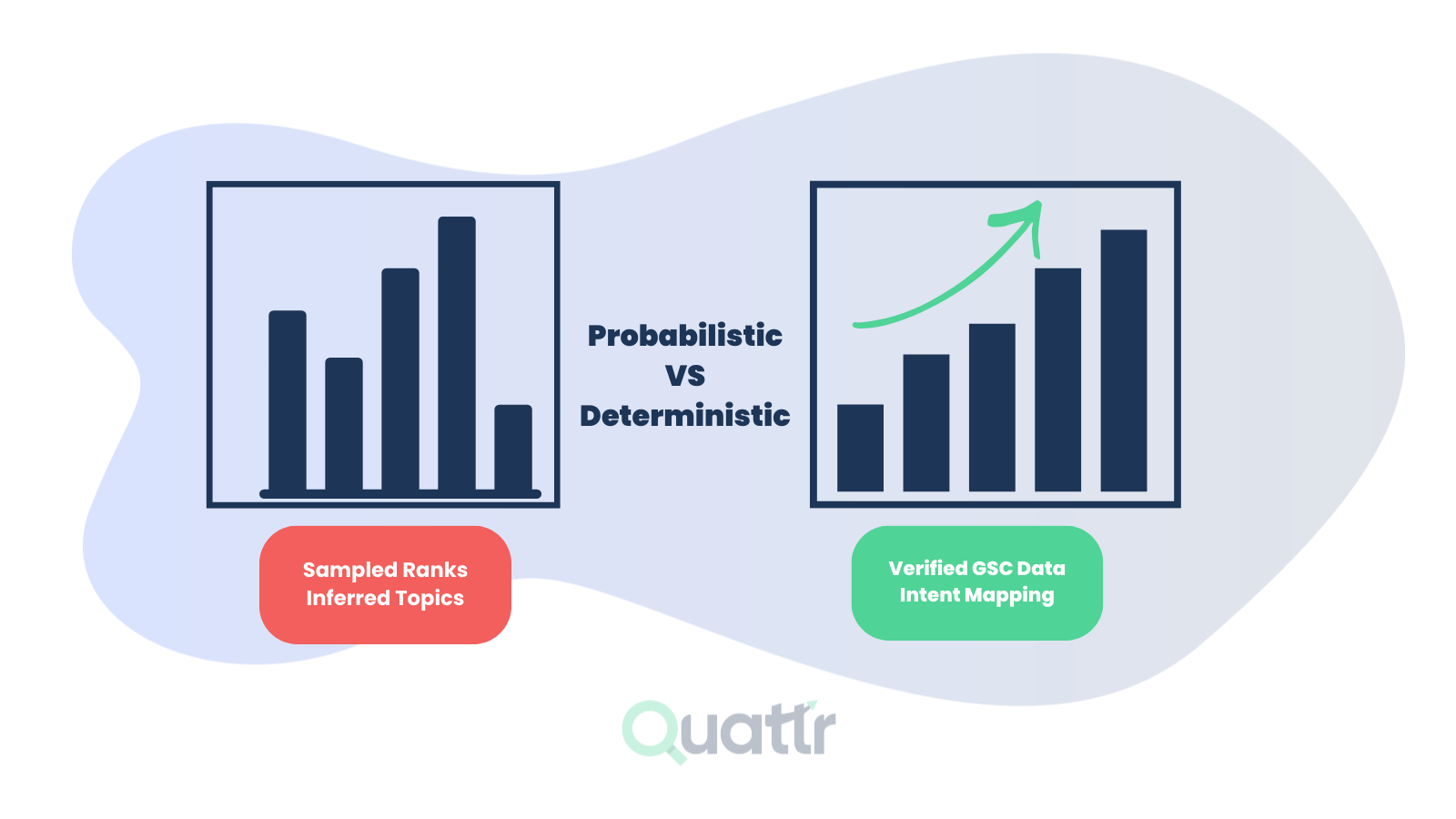

The truth is that most SEO metrics today are probabilistic at best, modeled from partial crawls, sampled SERPs, inferred keyword coverage, and statistical smoothing. These methods can suggest directional opportunity, but they aren’t anchored to how a specific website is actually performing at scale.

Collectively, they produce numbers that describe likelihood, not reality: estimates of what should happen rather than measurements of what is happening.

That distinction matters. Probabilistic data works in analysis, but breaks down when it’s used to drive execution. Once decisions depend on it, the metric is questioned, broken apart, and tested in ways inferred or modeled data can’t consistently support. Trying to justify the number only creates more uncertainty.

The result is predictable: forecasts become harder to defend, priorities stop holding under scrutiny, and the closer a decision moves toward commitment, the less weight the data carries.

Ask any senior SEO how many of their "core metrics" represent direct measurement versus modeled estimates. Most will admit the industry’s open secret:

1. Rank distributions are sampled.

2. Visibility scores are heuristics.

3. Topic clusters are predicted embeddings.

4. AI-Answer Engine presence is inferred from partial captures.

5. Share of voice is an extrapolation built on incomplete SERP observation.

It’s not that probabilistic data is “bad.” It tells you what might be true, but it breaks once you ask it to carry more weight than a directional estimate.

Probabilistic data is built on inference, not observation. In a sandbox, this is acceptable. At scale, it introduces a "data drift" that paralyzes governance.

At scale, ambiguity isn’t just a nuisance, it’s a tax on execution velocity. Probabilistic SEO data creates three structural failures that stall even the most talented teams:

1. The Execution Gap: Velocity dies when platforms "move the goalposts." When signals shift unpredictably due to sampling errors, SEO roadmaps are rewritten quarterly to chase ghosts, rather than driving long-term equity.

2. The Death of Causality: To scale, you must prove that Action A caused Revenue. Because probabilistic measurement layers are inherently unstable, you cannot isolate your impact from the tool's noise. Without causality, SEO forecasting is treated as "guesswork."

3. Market Fragmentation: Cross-market SEO requires a single source of truth. Probabilistic systems fracture under the weight of different SERP shapes and device patterns across GEOs. This forces teams into a reactive posture, constantly explaining why the data looks different, rather than executing on what makes it grow.

What enterprise SEO truly needs is not another dashboard; it’s a deterministic truth layer:

1. Consistent measurement across GEOs, SERP modes, and AI surfaces.

2. Traceable, auditable evidence behind every visibility claim.

3. Causal proof that action A led to result B.

This is where Quattr diverges from the SEO category. Rather than relying on scrape-and-guess methodologies, the platform aligns external visibility signals with raw website data to establish what actually changed, and why.

This foundation unlocks something most SEO teams still lack: true SEO experimentation. With a deterministic truth layer, Quattr enables controlled A/B testing across pages, templates, and content systems, measuring lift, regression, and neutral impact with causal confidence.

Instead of debating whether a rollout “worked,” teams can isolate variables, account for seasonality and noise, and prove outcomes before scaling execution. Deterministic truth doesn’t just improve reporting, it makes SEO testable, governable, and repeatable at enterprise scale.
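To make the idea of separating a rollout's effect from seasonality concrete, here is a minimal sketch of a control-adjusted comparison (a ratio form of difference-in-differences). It is not Quattr's implementation; the function names, the click figures, and the 2% neutrality threshold are all assumptions chosen for illustration, and a real test would also need a significance check.

```python
from statistics import mean


def relative_lift(control_pre, control_post, variant_pre, variant_post):
    """Estimate the variant's lift after removing the control group's drift.

    Each argument is a list of daily clicks for a page group. The control
    group's pre-to-post change stands in for seasonality and background noise.
    """
    control_ratio = mean(control_post) / mean(control_pre)
    variant_ratio = mean(variant_post) / mean(variant_pre)
    # How much more (or less) the variant moved than the control did
    return variant_ratio / control_ratio - 1.0


def classify(lift, threshold=0.02):
    """Label the outcome; the threshold is an arbitrary illustrative cutoff."""
    if lift > threshold:
        return "lift"
    if lift < -threshold:
        return "regression"
    return "neutral"


# Hypothetical data: the whole site rose 10% seasonally, the variant rose 21%.
control_pre, control_post = [100, 100, 100, 100], [110, 110, 110, 110]
variant_pre, variant_post = [200, 200, 200, 200], [242, 242, 242, 242]

lift = relative_lift(control_pre, control_post, variant_pre, variant_post)
outcome = classify(lift)
```

In this made-up case the variant's raw 21% gain shrinks to roughly 10% once the control group's seasonal rise is accounted for, which is the number a team would defend when deciding whether to scale the change.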

Let’s look more closely at what sets Quattr apart.

The industry’s mistake isn’t using probabilistic models, it’s using them in isolation. Visibility is inferred, trendlines are projected, and outcomes are explained after the fact. What’s missing is a grounding layer that defines what is actually happening before modeling what might happen next.

Quattr changes the equation by treating Google Search Console Bulk Export and Server Logs as the engine room of its truth layer. These sources form the deterministic backbone of GIGA (Growth Intelligence, Guidance, and Automation).

GIGA establishes a semantic system of record by aligning external search and AI visibility signals with first-party telemetry from your own site. It does not replace probabilistic insight; it anchors it.

In practice, this means predictive signals are always interpreted alongside real outcomes. AI visibility is joined with actual AI-referred visits in a single trended view. Search market share is viewed in context with GA4 traffic, not abstracted away from it. Deterministic data tells you what happened; probabilistic modeling reveals what deterministic signals may be masking.

For example, deterministic performance alone can’t answer whether seasonality is suppressing demand, or whether underlying visibility has quietly eroded. Probabilistic modeling can surface that a seemingly stable trend is hiding a 7% loss in true search presence. Together, they provide a complete operational picture.
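A small worked calculation shows how a flat trend can conceal that kind of loss. The figures below are invented for illustration and are not Quattr data; `search_share` is a hypothetical helper that treats search presence as clicks divided by an estimate of total market demand.

```python
def search_share(clicks, market_demand):
    """Share of available demand captured, as a fraction between 0 and 1."""
    return clicks / market_demand


# Hypothetical figures: clicks are flat year over year,
# but modeled market demand grew by 7.5%.
last_year = search_share(clicks=10_000, market_demand=100_000)
this_year = search_share(clicks=10_000, market_demand=107_500)

# Relative change in share despite an unchanged click count
share_change_pct = round((this_year / last_year - 1) * 100, 1)
```

Here the deterministic click count is unchanged, yet the share of demand captured has fallen by about 7%, which only becomes visible when the modeled demand estimate is read alongside the first-party number.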

By anchoring predictive insight to causal, first-party truth, Quattr eliminates hallucination in SEO reporting. This foundation allows teams to move from estimated reach to executable causality, without losing the foresight needed to act early.

Once the truth layer exists, execution becomes straightforward and, critically, causal.

The Autonomous Linking API is the purest example of deterministic execution. It ingests the raw, deterministic search query data from GSC to find demand clusters and "missing paths," then generates highly contextual, entity-aligned link pairs with diversified, query-driven anchor text.

The Result: You aren't guessing if the links work. You are using the graph to choose the shortest, most authoritative path between an entity, an intent, and a page.
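The general idea of deriving link candidates from query data can be sketched as follows. This is an assumption-laden illustration, not the Autonomous Linking API: the function `suggest_link_pairs`, the row format, the impression threshold, and the rule "link from weaker pages to the strongest page for a shared query" are all hypothetical.

```python
from collections import defaultdict


def suggest_link_pairs(rows, existing_links, min_impressions=100):
    """Propose (source, target, anchor_query) links that do not exist yet.

    rows: iterable of (query, page, impressions), e.g. aggregated from a
          Search Console export.
    existing_links: set of (source_page, target_page) already on the site.
    """
    pages_by_query = defaultdict(dict)
    for query, page, impressions in rows:
        current = pages_by_query[query].get(page, 0)
        pages_by_query[query][page] = current + impressions

    scored = []
    for query, pages in pages_by_query.items():
        if sum(pages.values()) < min_impressions:
            continue  # too little demand to justify a link
        target = max(pages, key=pages.get)  # strongest page for this query
        for source in pages:
            if source != target and (source, target) not in existing_links:
                scored.append((pages[target], source, target, query))

    # Highest-demand targets first; ties broken by source path for stability
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(source, target, query) for _, source, target, query in scored]


# Hypothetical rows and link graph
rows = [
    ("secure file transfer", "/products/sft", 900),
    ("secure file transfer", "/blog/what-is-sft", 300),
    ("managed file transfer", "/products/mft", 500),
    ("managed file transfer", "/blog/what-is-sft", 50),
    ("rare query", "/a", 5),
    ("rare query", "/b", 3),
]
existing_links = {("/blog/what-is-sft", "/products/sft")}
suggestions = suggest_link_pairs(rows, existing_links)
```

With these sample rows, the only suggestion is a link from the blog post to the managed file transfer product page, anchored on the query both pages already receive impressions for. The already-linked pair and the low-demand query are skipped.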

Quattr’s platform is built to track and optimize for the Generative AI ecosystem (Answer Engine Optimization, or Generative Engine Optimization).

1. Deterministic Capture: Quattr captures verifiable citations across GenAI surfaces and matches them to your GSC high-intent queries.

2. GIGA Agent: Uses deterministic data to find precise content gaps, ensuring your content is engineered to objectively exceed the benchmarks of current winners.

Quattr's Market Share Metric isn’t a score; it’s a unified visibility distribution mapped across all competitive surfaces.

1. It is linked to, and analyzed against, actual performance metrics such as clicks, sessions, and impressions extracted from the GSC data.

2. It normalizes data across global markets, devices, and intent segments, allowing teams to act on a shared truth and eliminate regional volatility.

Probabilistic clustering reshuffles taxonomies every quarter. GIGA ends that loop.

Because it operates as a semantic operating system, it maintains a canonical map linking every intent, entity, and page to its defined purpose. That canonical mapping stabilizes your clusters, internal links, and content backlog even as algorithms or models shift.

The result: no more moving goalposts. Roadmaps hold, strategies persist, and every page’s role in the experience graph is known, measurable, and provable.

The industry will continue to debate AI search, zero-click behavior, and algorithmic opacity. But none of these challenges matter if the measurement layer beneath them isn’t deterministic.

Probabilistic tools tell you what might be happening. Quattr shows what is happening and then lets you act on it at scale.

Enterprises don’t win by predicting the future. They win by eliminating uncertainty in the present.

Quattr, through GIGA, semantic truth mapping, execution-led workflows, and enterprise governance, delivers what probabilistic SEO systems cannot: operational clarity at scale.

Inferred visibility estimates what might be happening based on sampling and models. Deterministic visibility is based on traceable, auditable signals, where every claim can be tied back to a verifiable source such as query-level data or captured AI citations.

AI answer engines surface content through opaque retrieval paths. If visibility is inferred rather than captured, teams can’t prove where, why, or how a brand appeared, or didn’t. This turns AI visibility into narrative reporting instead of auditable evidence.

At scale, Google Search Console, especially Bulk Export, provides one of the few sources of deterministic, query-level demand data. Long-tail queries increasingly reflect AI-mediated retrieval, making GSC a foundational signal layer rather than a diagnostic tool.
