Kiteworks Quadrupled Non-Brand Traffic with Quattr.

A leading legal intelligence platform is dedicated to making state and federal court data accessible and actionable. The platform connects legal professionals and the public with critical information across millions of programmatically generated pages by transforming complex legal documents into a powerful and easily searchable resource.

The company’s product and engineering teams partnered with Quattr to solve a problem no one had tested at scale before: not how users behave, but how search engine crawlers behave.

Instead of guessing, they designed a large-scale experiment. Quattr’s AI-powered internal links were deployed to a select set of 50,000 pages, while comparable pages were intentionally left unchanged so the true impact could be measured.

The goal was clear: prove with data that connecting related content through semantic, AI-driven links leads to faster and sustained crawler discovery, and that this effect is measurable, immediate, and repeatable.

THE CHALLENGE

The platform was publishing thousands of legal cases every day. Each one had valuable content that could bring in potential customers through search. However, the more content they published, the more difficult it became for Google to discover and crawl it effectively.

Without intelligent connections between pages, Google's crawlers couldn't determine which pages were most important or understand how topics related to each other. This led to inefficient crawling: low-value pages consumed crawler resources while high-value pages went undiscovered.

The team had already tried multiple solutions, but none worked well enough.

First attempt: Manual linking. Staff tried adding links between related case pages by hand. With millions of pages and thousands of new ones published daily, no team could keep up with that volume. The approach simply did not scale.

Second attempt: Rule-based systems. The engineering team developed a system that automatically linked pages based on fixed attributes, such as jurisdiction, case type, and filing date. While this seemed logical, search engines evaluate content relationships based on semantic meaning and context, rather than rigid database rules. The approach couldn't adapt to how search algorithms actually work.

The core issue: Without semantic connections between pages, Google's crawlers treated millions of unique legal cases as undifferentiated content. They couldn't identify which pages held authority on specific topics. They couldn't understand topical relationships. They were crawling low-priority pages while missing critical high-conversion content.

The business impact was measurable and significant.

New cases took weeks to appear in Google search results. By the time they were indexed, competitors had already captured that traffic. When the team updated existing cases with new information, Google didn't detect the changes for days. Critical pages that could drive trial signups weren't receiving proportional crawler attention. Meanwhile, competitor sites with more effective internal link structures were capturing traffic for valuable legal queries.

The challenge extends beyond Google. ChatGPT browses the web to answer queries. Perplexity searches across sites for information. New AI search tools launch constantly, each needing to understand site architecture and content relationships.

All of these systems face the same fundamental question: How do we determine which content on a site is authoritative? How do we navigate millions of pages efficiently to find the most relevant information?

The platform needed proof that strategic internal linking would solve this discovery problem before committing significant engineering resources to a full deployment.

THE SOLUTION

The team took a scientific approach. Instead of deploying across the entire site and hoping for results, they designed a test that would provide clear, statistically valid answers.

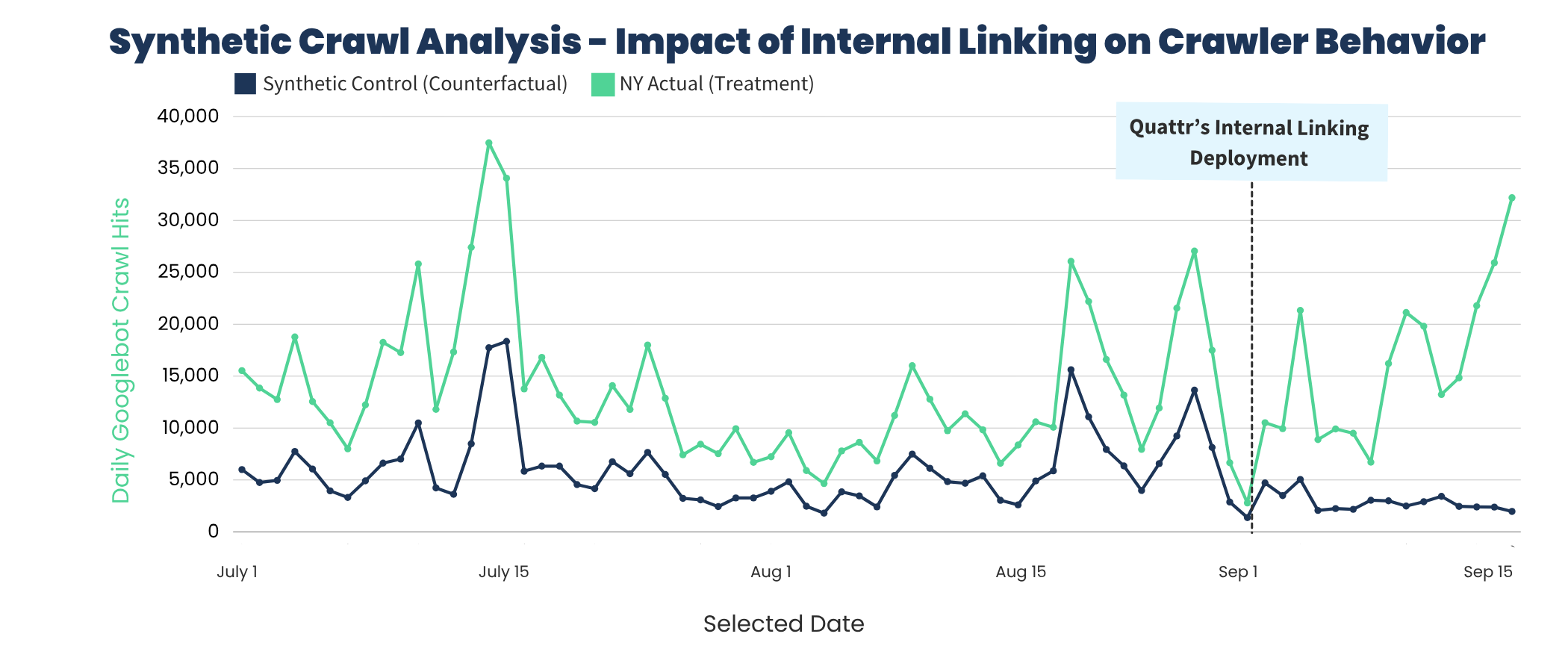

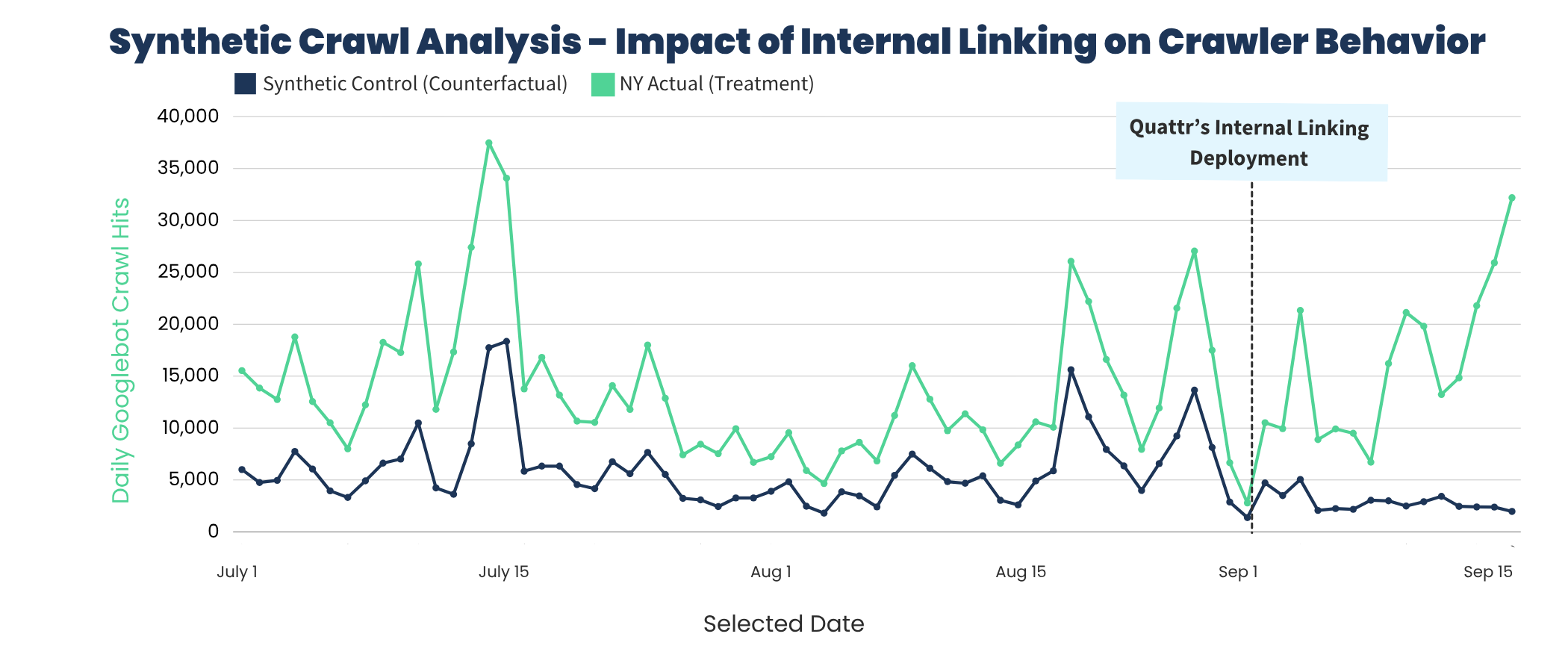

They selected approximately 50,000 case pages from New York and added 10 new internal links to each one using Quattr's technology. The deployment happened on September 3, 2025.

The critical element: They left all Florida and Massachusetts case pages completely unchanged. No new links. No modifications. This created a control group for comparison.

This matters because Google frequently makes algorithm updates. If they had measured only New York pages, they couldn't have distinguished the effect of their links from the effect of a Google update. By keeping Florida and Massachusetts as untreated controls, they could isolate the impact of internal linking with far more confidence.

The team filtered its server logs down to requests from genuine Google crawlers, using verified Google IP addresses located in California. Many fake crawlers spoof Googlebot's user-agent string, so filtering on verified IPs, rather than on the user agent alone, provided clean, accurate data about real Googlebot behavior.
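Google publicly documents one way to confirm that a request really came from Googlebot: run a reverse DNS lookup on the source IP, check that the hostname ends in googlebot.com or google.com, then run a forward lookup on that hostname and confirm it resolves back to the same IP. The case study doesn't say exactly how the team verified addresses, so this Python sketch only illustrates that documented check. The function name `is_verified_googlebot` is hypothetical, and the DNS resolvers are passed in as parameters so the logic can be exercised offline.

```python
import socket
from typing import Callable, List, Tuple

# Hostname suffixes Google documents for its common crawlers,
# e.g. crawl-66-249-66-1.googlebot.com
GOOGLE_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com")

# Both socket.gethostbyaddr and socket.gethostbyname_ex return (hostname, aliases, addresses).
Resolver = Callable[[str], Tuple[str, List[str], List[str]]]


def is_verified_googlebot(
    ip: str,
    reverse_lookup: Resolver = socket.gethostbyaddr,
    forward_lookup: Resolver = socket.gethostbyname_ex,
) -> bool:
    """Return True if `ip` passes a reverse-then-forward DNS check for Google's crawlers."""
    try:
        hostname, _, _ = reverse_lookup(ip)
    except OSError:  # socket.herror / socket.gaierror are OSError subclasses
        return False  # no PTR record, so the address cannot be verified
    if not hostname.lower().rstrip(".").endswith(GOOGLE_CRAWLER_SUFFIXES):
        return False  # PTR record points somewhere other than Google
    try:
        _, _, addresses = forward_lookup(hostname)
    except OSError:
        return False
    # The hostname must resolve back to the original IP; otherwise the PTR could be forged.
    return ip in addresses
```

In a real pipeline you would cache the result per IP, since the same crawler addresses appear millions of times in the logs. `socket.gethostbyname_ex` only returns IPv4 addresses, so IPv6 crawler traffic would need `socket.getaddrinfo` instead.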

They collected 87 days of pre-intervention baseline data to establish normal crawler patterns, then monitored crawler activity for 35 days after deployment.

Quattr's system uses three key approaches to create effective discovery paths for crawlers.

First approach: Semantic relationships. The system analyzes what the content actually means, rather than just keywords or metadata, to identify conceptual relationships between pages.

For example, a breach of contract case involving commercial real estate and a lease dispute case might use completely different legal terminology and come from different jurisdictions. Quattr's system still recognizes that they are semantically related through their underlying legal concepts and business context.

This matters because Google's crawlers look for semantic signals to understand topical clusters. When they encounter links between semantically related pages, they can map the site's knowledge structure. At scale, this mirrors the way legal experts connect case law, not the way a basic database query matches fields.
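The case study doesn't describe how Quattr represents meaning, so here is a minimal sketch under an explicit assumption: every page already has a semantic embedding vector from some text-embedding model (not shown). Related pages are then its nearest neighbors by cosine similarity, and the default `k=10` mirrors the 10 links added per page in the experiment. The names `cosine` and `suggest_links` are illustrative, not Quattr's API.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def suggest_links(embeddings: Dict[str, Vector], k: int = 10) -> Dict[str, List[str]]:
    """For every page URL, return the k other pages whose embeddings are most similar."""
    suggestions: Dict[str, List[str]] = {}
    for page, vec in embeddings.items():
        scored = [
            (cosine(vec, other_vec), other)
            for other, other_vec in embeddings.items()
            if other != page  # never link a page to itself
        ]
        # Highest similarity first; ties broken by URL so the output is deterministic.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        suggestions[page] = [other for _, other in scored[:k]]
    return suggestions
```

This brute-force version compares every pair of pages, which is fine for illustration but quadratic in the number of pages; at millions of pages an approximate nearest-neighbor index would be the usual substitute.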

Second approach: Search-demand anchor text. Instead of generic link text, Quattr pulls actual search queries from Google Search Console data: the exact phrases real users type to find content.

When users frequently search "statute of limitations contract dispute New York" and land on a specific case page, Quattr uses that phrase as the anchor text on links pointing to that page from related pages. This has two benefits.

First, the anchor text clearly describes the content of the destination page. Second, it matches actual search demand, so the link sends signals that align with what users are looking for. These demand-driven signals tell crawlers what content they will find when they follow the link.
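A minimal sketch of demand-driven anchor text, assuming query/page performance rows have already been exported from Search Console. The `QueryRow` record shape and the `anchor_text_for` helper are hypothetical. For a given destination page, the sketch picks the query that sends it the most clicks.

```python
from typing import Iterable, NamedTuple, Optional


class QueryRow(NamedTuple):
    """One query/page pair from Search Console performance data (hypothetical record shape)."""
    query: str
    page: str
    clicks: int
    impressions: int


def anchor_text_for(destination: str, rows: Iterable[QueryRow], min_clicks: int = 1) -> Optional[str]:
    """Pick the query that sends the most clicks to `destination`, for use as link anchor text."""
    candidates = [r for r in rows if r.page == destination and r.clicks >= min_clicks]
    if not candidates:
        return None  # no demand signal; the caller could fall back to the page title
    # Most clicks wins; impressions break ties, then alphabetical order for determinism.
    best = min(candidates, key=lambda r: (-r.clicks, -r.impressions, r.query))
    return best.query
```

Every link pointing at `/ny/case-123`, for instance, would reuse the same top query, which keeps the anchor text consistent with how searchers actually describe that case.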

Third approach: Continuous learning from crawler behavior. Quattr monitors crawler activity continuously. Which pages receive more crawler attention? Which links do crawlers follow most often? What patterns emerge in crawler behavior?

The system uses this data to improve continuously. It deploys links, measures crawler response through log files, identifies high-performing patterns, reinforces successful discovery paths, and adapts to algorithmic changes.

This happens automatically. No manual updates are required. As new content is published, the system generates appropriate links. As crawler behavior evolves, it adapts its approach.
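The measure-and-adapt loop starts with log analysis. As an illustration only (the log format, the `crawler_hits` and `rank_by_lift` helpers, and the ranking rule are assumptions, not Quattr's implementation), this sketch counts Googlebot requests per URL in Apache/Nginx combined-format logs, keeping only IPs from an already-verified set, and ranks URLs by how much their crawl volume changed between two periods.

```python
import re
from collections import Counter
from typing import Iterable, List, Set, Tuple

# Combined log format: ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "(?P<method>[A-Z]+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def crawler_hits(lines: Iterable[str], verified_ips: Set[str]) -> Counter:
    """Count requests per URL path that claim to be Googlebot AND come from a verified IP."""
    hits: Counter = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if m and "Googlebot" in m["agent"] and m["ip"] in verified_ips:
            hits[m["path"]] += 1
    return hits


def rank_by_lift(before: Counter, after: Counter) -> List[Tuple[str, float]]:
    """Rank paths by relative change in crawler hits; paths with no 'before' hits are skipped."""
    lifts = [
        (path, (after[path] - count) / count)  # Counter returns 0 for unseen paths
        for path, count in before.items()
        if count > 0
    ]
    return sorted(lifts, key=lambda pair: (-pair[1], pair[0]))
```

Requests that spoof the Googlebot user agent from an unverified address are silently dropped, which is what keeps the before/after comparison clean.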

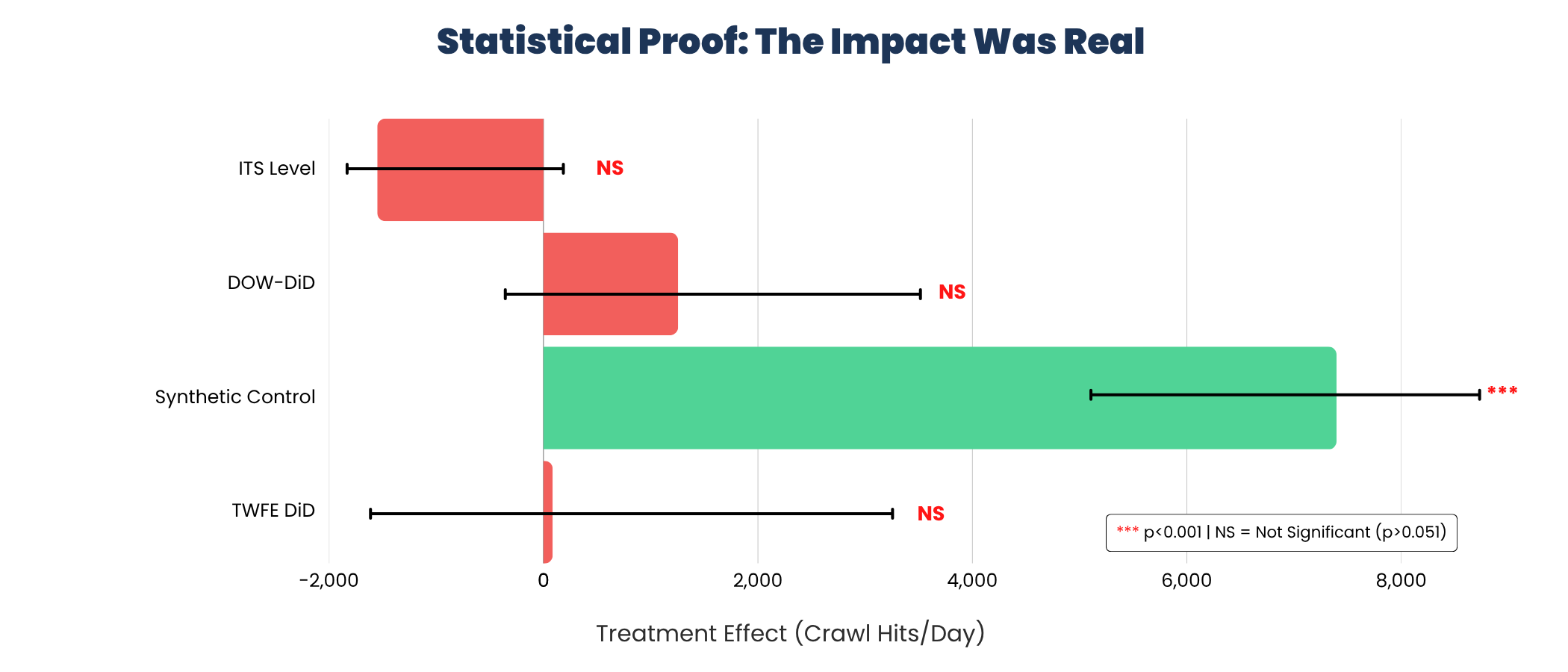

The team applied multiple statistical methods to validate the findings: Interrupted Time Series analysis and Difference-in-Differences comparisons, both standard techniques for estimating causal effects from observational data.

The goal was to confirm that the internal links directly caused the increase in crawler activity.
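Difference-in-Differences compares the change in the treated group with the change in the control group over the same period, so anything that hits both groups at once, such as a Google algorithm update, cancels out: DiD = (treated_post - treated_pre) - (control_post - control_pre). The case study doesn't publish its exact model specification, so this is only the textbook 2x2 version on mean daily crawl counts; here New York pages would be the treated group and Florida and Massachusetts pages the control group. The function name `diff_in_diff` is illustrative.

```python
from statistics import mean
from typing import Sequence


def diff_in_diff(
    treated_pre: Sequence[float],
    treated_post: Sequence[float],
    control_pre: Sequence[float],
    control_post: Sequence[float],
) -> float:
    """Classic 2x2 difference-in-differences estimate on mean daily crawl counts.

    The control group's change over the same window stands in for what would have
    happened to the treated group without the intervention.
    """
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change
```

For example, if treated pages average 100 crawls a day before and 150 after, while control pages go from 50 to 55, the estimate is (150 - 100) - (55 - 50) = 45 extra crawls per day attributable to the treatment.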

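They didn’t just show a pattern; the effect was statistically significant at p < 0.001. That means that if the links had no real effect, a difference at least this large would arise by chance less than 0.1% of the time, which is strong evidence of a causal effect.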
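A p-value answers a narrow question: if the intervention had no effect, how often would chance alone produce a difference at least as large as the one observed? The case study doesn't say which test produced p < 0.001, so this sketch uses a different, easy-to-inspect technique, an exact one-sided permutation test on group means, purely to illustrate that definition. Exact enumeration is only practical for small samples, and daily crawl counts are autocorrelated, so a real analysis of this experiment would need a test suited to time series. The name `permutation_p_value` is illustrative.

```python
from itertools import combinations
from statistics import mean
from typing import Sequence


def permutation_p_value(treated: Sequence[float], control: Sequence[float]) -> float:
    """Exact one-sided permutation test for 'treated mean is greater than control mean'.

    Enumerates every way of relabelling the pooled observations into groups of the
    original sizes and returns the share whose mean difference is at least the observed one.
    """
    pooled = list(treated) + list(control)
    n = len(treated)
    observed = mean(treated) - mean(control)
    total = 0
    extreme = 0
    for idx in combinations(range(len(pooled)), n):
        chosen = set(idx)
        group_a = [pooled[i] for i in idx]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        if mean(group_a) - mean(group_b) >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    return extreme / total
```

With treated values [10, 11, 12] and control values [1, 2, 3], only one of the 20 possible relabellings is as extreme as the observed split, so the function returns 0.05.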

THE RESULT

Within 24 to 48 hours of adding the new links to New York pages, verified Googlebot activity jumped 237% above baseline. The Florida and Massachusetts pages, which received no changes, showed no comparable increase, indicating that the links caused the jump.

Crawl activity peaked at 394% above baseline as Google aggressively explored and validated the new connections. At the peak, the site received 13,252 extra crawler visits per day.

After this exploration phase, crawl activity stabilized at 9.2% above the pre-deployment level, which became the new baseline for the rest of the observation window: the site continued to receive 1,380 additional crawler visits per day. Across the full 35 days, including the initial spike, the extra visits totaled about 259,000.

This points to algorithmic memory: Google appears to have learned the site's new structure and incorporated it into its ongoing crawling behavior.
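The result figures mix two kinds of numbers: percentage lifts relative to the pre-intervention baseline, and counts of extra visits summed over a window. This sketch, using made-up daily counts rather than the study's data and illustrative helper names, shows how both are derived, and why a total over a window that includes an exploration spike can be much larger than the stabilized daily gain multiplied by the number of days.

```python
from typing import List, Sequence


def excess_visits(daily_visits: Sequence[float], baseline_per_day: float) -> List[float]:
    """Crawler visits above the pre-intervention baseline, day by day."""
    return [visits - baseline_per_day for visits in daily_visits]


def percent_lift(value: float, baseline_per_day: float) -> float:
    """Relative change versus baseline, in percent (237.0 means +237%)."""
    return (value - baseline_per_day) * 100.0 / baseline_per_day
```

With a hypothetical baseline of 1,000 visits per day and post-deployment days of 3,370, 4,940, 2,000, 1,092 and 1,092 visits, `percent_lift` gives +237% on day one, +394% at the peak and +9.2% once things settle. The summed excess is 7,494 visits, of which 6,310 come from the first two days.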

While this experiment focused on crawler behavior, a parallel test measuring user clicks confirmed the business impact. The same AI-powered internal links drove a 15.1% increase in clicks on treated pages and a 19.4% lift relative to the untreated control states.

This completes the full story: smarter internal linking produced a 237% jump in crawler activity, which in turn translated into 15–19% more user clicks.

And this was tested on just 50,000 pages. The platform has millions more. If scaled across the full site, the same approach is projected to generate:

1. 36,800 additional crawler visits per day (≈13.4 million per year)

2. ~300,000 additional clicks every month, based on the conversion rates observed in the test

Once deployed, the system operates autonomously, continuously generating new, semantically relevant links as content is published, with no manual maintenance required.

Quattr's AI-first platform evaluates sites the way search engines do to find opportunities across content, experience, and discoverability. A team of growth concierges analyzes your data and recommends the top improvements for faster organic traffic growth. Growth-driven brands trust Quattr and are seeing sustained traffic growth.
